
Open Lighting Architecture

0.9.5


A common question that comes up is "How can I communicate with olad using something other than C++, Python or Java?".

The bad news is that unless someone has already written a client library for your language, this requires a bit of work. The good news is that there are people willing to help, and if you do a good job writing the client library, we'll incorporate it into the OLA codebase and maintain it going forward.

This section provides an introduction to Remote Procedure Calls (RPC). If you have used RPCs in other situations (RMI, CORBA, Thrift, etc.), you may want to skip this part.

The Remote procedure call article has some good background reading.

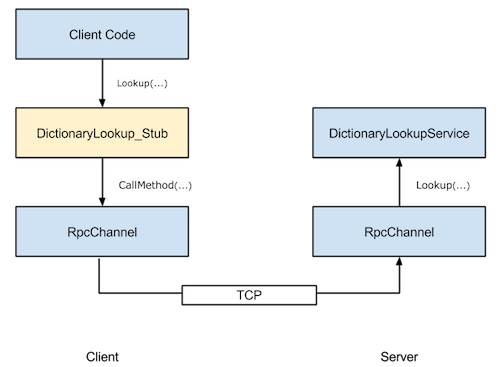

On the client side there is a stub, usually a class. Each stub method takes some request data and some sort of callback function that is run when the remote call completes. The stub class is auto-generated from the protocol specification. The stub class is responsible for serializing the request data, sending it to the remote end, waiting for the response, deserializing the response and finally invoking the callback function.

On the server side there is usually a 'Service' class that has to be provided. Typically an abstract base class is generated from the protocol specification, and the programmer subclasses it and provides an implementation for each method that can be called.
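To make the server side concrete, here is a minimal Python sketch of what a generated abstract service and a hand-written implementation might look like for a dictionary lookup service like the Dictionary.proto example further down. Plain dicts stand in for the request and response protobufs. The names `DictionaryLookupService`, `call_method` and `InMemoryDictionary` are hypothetical and are not OLA's generated code.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict


class DictionaryLookupService(ABC):
    """Stand-in for a generated abstract service (hypothetical)."""

    METHODS = ("Lookup",)  # the RPC methods declared in the .proto

    @abstractmethod
    def Lookup(self, controller, request: Dict, response: Dict,
               done: Callable[[], None]) -> None:
        """Fill in `response` for `request`, then call `done()`."""

    def call_method(self, method_name: str, controller, request: Dict,
                    response: Dict, done: Callable[[], None]) -> None:
        # Generated code dispatches on the method name, so the RPC layer
        # never needs to know about individual methods.
        if method_name not in self.METHODS:
            raise ValueError("unknown method: " + method_name)
        getattr(self, method_name)(controller, request, response, done)


class InMemoryDictionary(DictionaryLookupService):
    """The part the programmer writes: one implementation per method."""

    def __init__(self, entries: Dict[str, str]):
        self._entries = entries

    def Lookup(self, controller, request, response, done):
        word = request["word"]
        response["definition"] = self._entries.get(word, "unknown word")
        response["similar_words"] = []
        done()
```

The RPC layer only ever calls `call_method`, so adding a method to the service means adding a name to `METHODS` and one more override in the subclass.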

Because the client stub is autogenerated, there can be a lot of duplicated code that constructs the request message, sets up the completion callbacks and invokes the method. Rather than forcing the users of the client library to duplicate all this work, there is usually a layer in between that provides a friendly API and calls into the stub. This layer can be thin (all it does is wrap the stub functions) or thick (a single API call may invoke multiple RPCs).

These client APIs can be blocking or asynchronous. Blocking clients force the caller to wait for the RPC to complete before the function call returns. Asynchronous APIs take a callback, in much the same way as the stub itself does.
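A minimal Python sketch of both styles layered over the same stub follows. `FakeDictionaryStub` is a hypothetical stand-in for a generated stub: it completes each call on a background thread instead of doing real network I/O. `DictionaryClient` and its methods are also illustrative names.

```python
import threading
from typing import Callable, Dict, List


class FakeDictionaryStub:
    """Stand-in for a generated stub: completes each call on another thread."""

    def Lookup(self, controller, request: Dict, response: Dict,
               done: Callable[[], None]) -> None:
        def work():
            response["definition"] = "definition of " + request["word"]
            done()
        threading.Thread(target=work).start()


class DictionaryClient:
    """Thin client API: builds the request so callers never touch the stub."""

    def __init__(self, stub):
        self._stub = stub

    def lookup_async(self, word: str, callback: Callable[[str], None]) -> None:
        # Asynchronous: returns at once; `callback` runs when the RPC completes.
        response: Dict = {}
        self._stub.Lookup(None, {"word": word}, response,
                          lambda: callback(response["definition"]))

    def lookup_blocking(self, word: str, timeout: float = 5.0) -> str:
        # Blocking: built on the async call, waits for the completion callback.
        finished = threading.Event()
        result: List[str] = []

        def on_done(definition: str) -> None:
            result.append(definition)
            finished.set()

        self.lookup_async(word, on_done)
        if not finished.wait(timeout):
            raise TimeoutError("RPC did not complete in time")
        return result[0]
```

Waiting on an `Event` only works because the sketch's stub completes on another thread. In a single-threaded, event-loop based client, a blocking wrapper would instead have to run the loop until the callback fires.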

OLA uses Protocol Buffers as the data serialization format. Protocol buffers allow us to wrap the request and response data up into a message (similar to a C struct) and then serialize the message to binary data in a platform independent way. It's worth reading the Protocol Buffers documentation before going further. You don't need to understand the binary format, but you should be familiar with the Protocol Buffer definition format.

As well as data serialization, protobufs also provide a basic framework for building RPC services. Early versions of protobufs came with a generic service generator, which has been deprecated since the 2.4.0 release because the generated code was rather inflexible (in trying to be all things to all people, it left many needs unaddressed).

Let's look at a simple example of a dictionary service, Dictionary.proto. The client sends the word it's looking for, and the server replies with the definition and possibly some extra information, such as the pronunciation and a list of similar words.

```protobuf
message WordQuery {
  required string word = 1;
}

message WordDefinition {
  required string definition = 1;
  optional string pronunciation = 2;
  repeated string similar_words = 3;
}

service DictionaryLookup {
  rpc Lookup(WordQuery) returns(WordDefinition);
}
```

We can generate the C++ code by running:

```
protoc --plugin=protoc-gen-cppservice=protoc/ola_protoc_plugin \
  --cppservice_out ./ Dictionary.proto
```

The generated C++ stub class definition in Dictionary.pb.h looks something like:

```cpp
class DictionaryLookup_Stub {
 public:
  DictionaryLookup_Stub(::google::protobuf::RpcChannel* channel);

  void Lookup(::google::protobuf::RpcController* controller,
              const ::WordQuery* request,
              ::WordDefinition* response,
              ::google::protobuf::Closure* done);
};
```

As you can see, the 2nd and 3rd arguments are the request and response messages (WordQuery and WordDefinition respectively). The 4th argument is the completion callback, and the 1st argument keeps track of the outstanding RPC.

An implementation of DictionaryLookup_Stub::Lookup(...) is generated in Dictionary.pb.cc. Since the only thing that differs between methods is the request / response types and the method name, the implementation just calls through to the RpcChannel class:

```cpp
void DictionaryLookup_Stub::Lookup(::google::protobuf::RpcController* controller,
                                   const ::WordQuery* request,
                                   ::WordDefinition* response,
                                   ::google::protobuf::Closure* done) {
  channel_->CallMethod(descriptor()->method(0),
                       controller, request, response, done);
}
```

Protocol Buffers doesn't provide an implementation of RpcChannel, since it's implementation-specific. Most of the time the RpcChannel uses a TCP connection, but you can imagine other data passing mechanisms.

As part of writing a new client you'll need to write an implementation of an RpcChannel. The C++ implementation is ola::rpc::RpcChannel.
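The same forwarding pattern is sketched in Python below. Every stub method passes its method name, controller, request, response and callback straight to `channel.CallMethod`, so a generator only has to emit one-line methods. `LoopbackChannel` is a hypothetical in-process channel used for illustration. A real one would serialize and send the request to olad over TCP, then run the callback when the response arrives.

```python
from typing import Callable, Dict


class DictionaryLookup_Stub:
    """Generated-style stub: each method just forwards to the channel."""

    def __init__(self, channel):
        self._channel = channel

    def Lookup(self, controller, request, response, done) -> None:
        self._channel.CallMethod("Lookup", controller, request, response, done)


class LoopbackChannel:
    """Hypothetical channel that calls handlers in-process instead of over TCP."""

    def __init__(self, handlers: Dict[str, Callable[[Dict, Dict], None]]):
        self._handlers = handlers

    def CallMethod(self, method_name, controller, request, response, done):
        # A real RpcChannel would wrap and send the request here, and run
        # `done` later, once the matching response has been received.
        self._handlers[method_name](request, response)
        done()
```

Because all the transport logic lives in `CallMethod`, swapping this loopback channel for a TCP one requires no change to the stub.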

Putting it all together produces the flow shown in the diagram below. The yellow code is auto-generated; the blue code has to be written by hand.

By default, olad listens on localhost:9010. Clients open a TCP connection to this ip:port and write serialized protobufs (see RPC Layer) to the connection.

Why TCP and not UDP? Mostly because the original developer was lazy and didn't want to deal with chunking data into packets when TCP handles this for us. However, TCP has its own problems, such as head-of-line blocking. For this reason RPC traffic should only be used on localhost, where packet loss is unlikely. If you want cross-host communication, you should probably be using E1.31 (sACN) or the Web APIs.

The OLA client libraries follow the thin, asynchronous client model. There is usually a 1:1 mapping between API methods and the underlying RPC calls. The clients take the arguments supplied to the API method, construct a request protobuf and invoke the stub method.

The exception is the Java library, which could do with some love.

The Python client continues to use the service generator that comes with Protocol Buffers. The C++ client and olad (the server component) have switched to our own generator, which can be found in protoc/. If you're writing a client in a new language, we suggest you build your own generator; protoc/CppGenerator.h is a good starting point.

If you don't know C++, don't worry; we're happy to help. Once you know what your generated code needs to look like, we can build the generator for you.

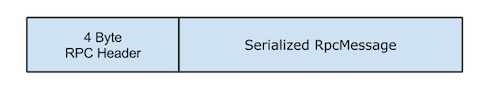

To make the RPC system message-agnostic we wrap the serialized request / response protobufs in an outer layer protobuf. This outer layer also contains the RPC control information, like what method to invoke, the sequence number etc.

The outer layer is defined in common/rpc/Rpc.proto and looks like:

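```protobuf
enum Type {
  REQUEST = 1;
  RESPONSE = 2;
  ...
};

message RpcMessage {
  required Type type = 1;
  optional uint32 id = 2;
  optional string name = 3;
  optional bytes buffer = 4;
}
```

The fields are as follows:

- type: the kind of message, for example REQUEST or RESPONSE.
- id: the sequence number; a response carries the same id as the request it answers.
- name: the name of the method to invoke.
- buffer: the serialized inner (request or response) protobuf.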

So putting it all together, the RpcChannel::CallMethod() should look something like:

```cpp
void RpcChannel::CallMethod(const MethodDescriptor *method,
                            RpcController *controller,
                            const Message *request,
                            Message *reply,
                            SingleUseCallback0<void> *done) {
  RpcMessage message;
  message.set_type(REQUEST);
  message.set_id(m_sequence.Next());
  message.set_name(method->name());

  std::string output;
  request->SerializeToString(&output);
  message.set_buffer(output);

  // Send the message over the TCP connection.
  SendMsg(&message);
}
```

That's it on the sender side at a high level, although some detail was skipped over. For instance, you'll need to store the sequence number, the reply message, the RpcController and the callback so you can do the right thing when the response arrives.
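One way to handle that bookkeeping is sketched in Python below. It keeps a table of outstanding calls keyed by sequence number. When a RESPONSE arrives, it looks up the entry, parses the inner buffer into the saved reply message and runs the callback. `PendingCall` and `PendingCallTable` are hypothetical names. The `parse` argument stands in for protobuf's `ParseFromString`. For brevity, the sketch ignores 32-bit wrap-around of the uint32 id.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class PendingCall:
    reply: object               # message to fill in from the response
    controller: object          # tracks success/failure of the call
    done: Callable[[], None]    # completion callback


class PendingCallTable:
    """Outstanding RPCs, keyed by the sequence number sent in RpcMessage.id."""

    def __init__(self):
        self._sequence = itertools.count(1)
        self._pending: Dict[int, PendingCall] = {}

    def add(self, reply, controller, done) -> int:
        # Allocate the id for the outgoing request and remember the call.
        seq = next(self._sequence)
        self._pending[seq] = PendingCall(reply, controller, done)
        return seq

    def complete(self, seq: int, buffer: bytes,
                 parse: Callable[[object, bytes], None]) -> bool:
        # Called for each RESPONSE; returns False for unknown or stale ids.
        call: Optional[PendingCall] = self._pending.pop(seq, None)
        if call is None:
            return False
        parse(call.reply, buffer)   # e.g. reply.ParseFromString(buffer)
        call.done()
        return True
```

Popping the entry on completion means a duplicate or late response with the same id is reported as unknown instead of running the callback twice.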

Finally, since protobufs don't contain length information, we prepend a 4 byte header to each message. The first 4 bits of the header are a version number (currently 1), while the remaining 28 bits are the length of the serialized RpcMessage.
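A minimal Python sketch of packing and unpacking that header follows. It assumes little-endian byte order, matching the `'<L'` format used by the Python example at the end of this page. The constant and function names are illustrative.

```python
import struct

PROTOCOL_VERSION = 1
VERSION_MASK = 0xF0000000   # top 4 bits: protocol version
SIZE_MASK = 0x0FFFFFFF      # low 28 bits: length of the serialized RpcMessage


def encode_header(size: int, version: int = PROTOCOL_VERSION) -> bytes:
    """Build the 4 byte header for a serialized RpcMessage of `size` bytes."""
    if size > SIZE_MASK:
        raise ValueError("message too large for a 28-bit length")
    return struct.pack("<I", ((version << 28) & VERSION_MASK) | size)


def decode_header(header: bytes):
    """Split a 4 byte header into (version, size)."""
    (value,) = struct.unpack("<I", header)
    return (value & VERSION_MASK) >> 28, value & SIZE_MASK
```

For example, a 300 byte message gets the header value 0x1000012C, which is sent as the bytes `2C 01 00 10`.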

When writing the code that sends a message, it's very important to enable TCP_NODELAY on the socket and to write the 4 byte header and the serialized RpcMessage in a single call to write(). Otherwise, the write-write-read pattern can introduce delays of up to 500ms to the RPCs; see Nagle's algorithm for a detailed analysis of why. Fixing this in the C++ & Python clients led to a 1000x improvement in the RPC latency and a 4x speedup in the RDM Responder Tests (see commit a80ce0ee1e714ffa3c036b14dc30cc0141c13363).
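A Python sketch of both points follows. It sets `TCP_NODELAY` right after connecting and hands the header and body to the socket as one buffer. The default host and port are the olad defaults mentioned above. `connect_to_olad` and `send_frame` are hypothetical helper names, and the little-endian header matches the Python example at the end of this page.

```python
import socket
import struct


def connect_to_olad(host: str = "127.0.0.1", port: int = 9010) -> socket.socket:
    """Open the RPC connection with Nagle's algorithm disabled."""
    sock = socket.create_connection((host, port))
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock


def send_frame(sock: socket.socket, serialized_rpc_message: bytes,
               version: int = 1) -> None:
    """Send header + serialized RpcMessage as one contiguous buffer."""
    size = len(serialized_rpc_message)
    if size > 0x0FFFFFFF:
        raise ValueError("message too large for a 28-bit length")
    header = struct.pack("<I", (version << 28) | size)
    # One buffer, one sendall(): never write the header and body separately.
    sock.sendall(header + serialized_rpc_message)
```

`sendall` may still loop over several `send()` calls for a large buffer, but the header is never sent on its own ahead of the body, which is what triggers the write-write-read stall.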

The methods exported by olad are defined in common/protocol/Ola.proto. If you compare the RPC methods to those provided by the ola::client::OlaClient class (the C++ API), you'll notice they are very similar.

To write a client in a new language you'll need the following:

I'd tackle the problem in the following steps:

Once that's complete, you should have a working RPC implementation. However, it will still require callers to deal with protobufs, stub objects and the network code. The final step is to write the thin layer on top that presents a clean, programmer-friendly API and handles the protobuf creation internally.

The last part is the least technically challenging, but it does require good API design so that new methods and arguments can be added later.

A quick example in Python-style code. This constructs a DmxData message, sends it to the server and then extracts the Ack response.

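```python
import socket
import struct

import Ola_pb2  # generated by protoc from common/protocol/Ola.proto
import Rpc_pb2  # generated by protoc from common/rpc/Rpc.proto


def update_dmx_data(universe, data):
    # connect to olad and disable Nagle's algorithm
    with socket.create_connection(("127.0.0.1", 9010)) as server:
        server.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)

        # Build the request; data is a bytes object of channel values
        dmx_data = Ola_pb2.DmxData()
        dmx_data.universe = universe
        dmx_data.data = data

        # Wrap in the outer layer
        rpc_message = Rpc_pb2.RpcMessage()
        rpc_message.type = Rpc_pb2.REQUEST
        rpc_message.id = 1
        rpc_message.name = 'UpdateDmxData'
        rpc_message.buffer = dmx_data.SerializeToString()

        # Prepend the 4 byte header (version 1, 28 bit length), one write
        body = rpc_message.SerializeToString()
        server.sendall(struct.pack('<L', (1 << 28) | len(body)) + body)

        # wait for response on socket (a robust client must handle short reads)
        response_data = server.recv(1000)

    # grab the first 4 bytes which is the header
    header = struct.unpack('<L', response_data[:4])[0]
    version, size = ((header & 0xf0000000) >> 28, header & 0x0fffffff)
    if version != 1:
        return None  # Bad reply!

    response = Rpc_pb2.RpcMessage()
    response.ParseFromString(response_data[4:4 + size])
    if response.type != Rpc_pb2.RESPONSE:
        return None  # not a response
    if response.id != 1:
        return None  # not the response we're looking for

    ack = Ola_pb2.Ack()
    ack.ParseFromString(response.buffer)
    return ack
```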
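Because TCP is a byte stream, a single `recv(1000)` may return less than one full message or more than one. Below is a minimal sketch of reading exactly one framed message, assuming the same little-endian header layout. `recv_exact` and `read_frame` are hypothetical helper names.

```python
import socket
import struct


def recv_exact(sock: socket.socket, count: int) -> bytes:
    """Read exactly `count` bytes, looping over short reads."""
    chunks = []
    while count > 0:
        chunk = sock.recv(count)
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        chunks.append(chunk)
        count -= len(chunk)
    return b"".join(chunks)


def read_frame(sock: socket.socket) -> bytes:
    """Return the serialized RpcMessage from the next frame on the socket."""
    (header,) = struct.unpack("<I", recv_exact(sock, 4))
    version, size = header >> 28, header & 0x0FFFFFFF
    if version != 1:
        raise ValueError("unsupported protocol version %d" % version)
    return recv_exact(sock, size)
```

Any bytes after the frame stay in the socket buffer for the next `read_frame` call, so back-to-back responses are handled one at a time.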

1.8.1.2